Pre-training vs Fine-Tuning vs In-Context Learning of Large Language Models

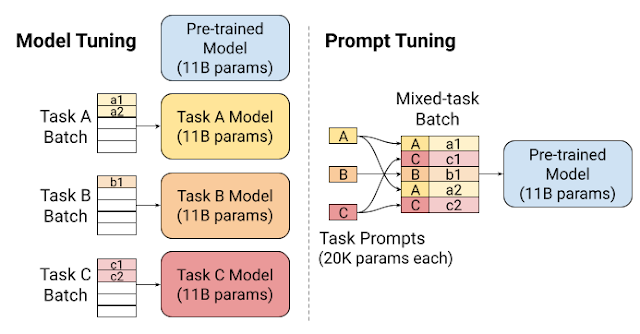

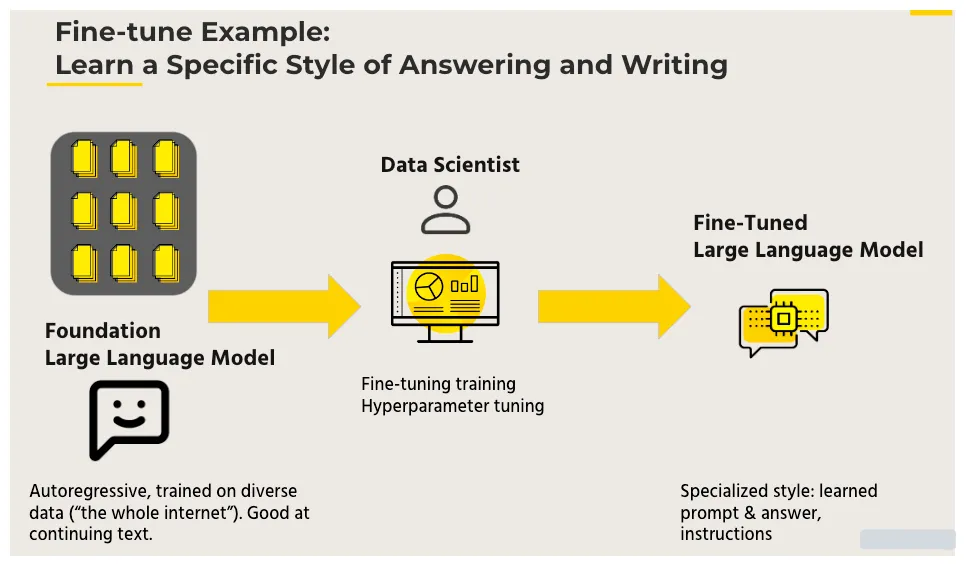

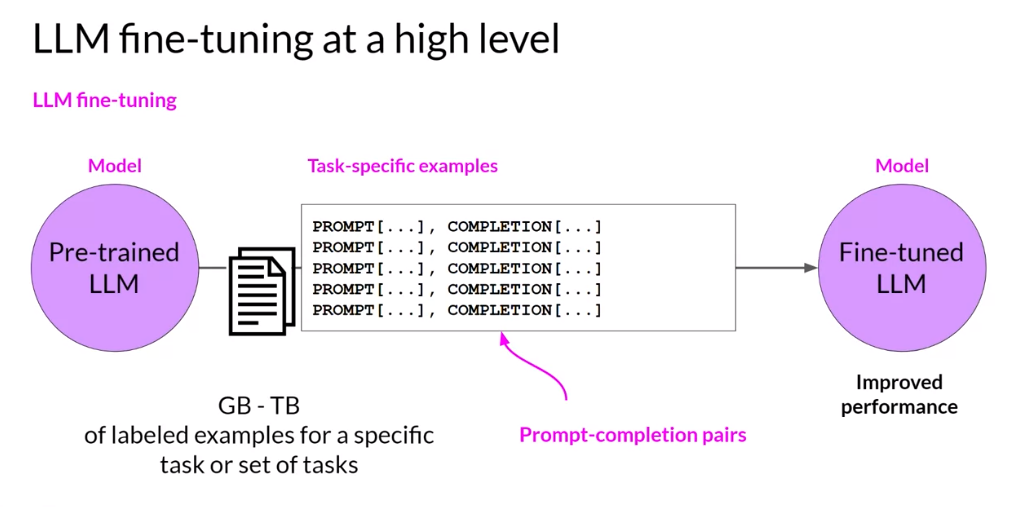

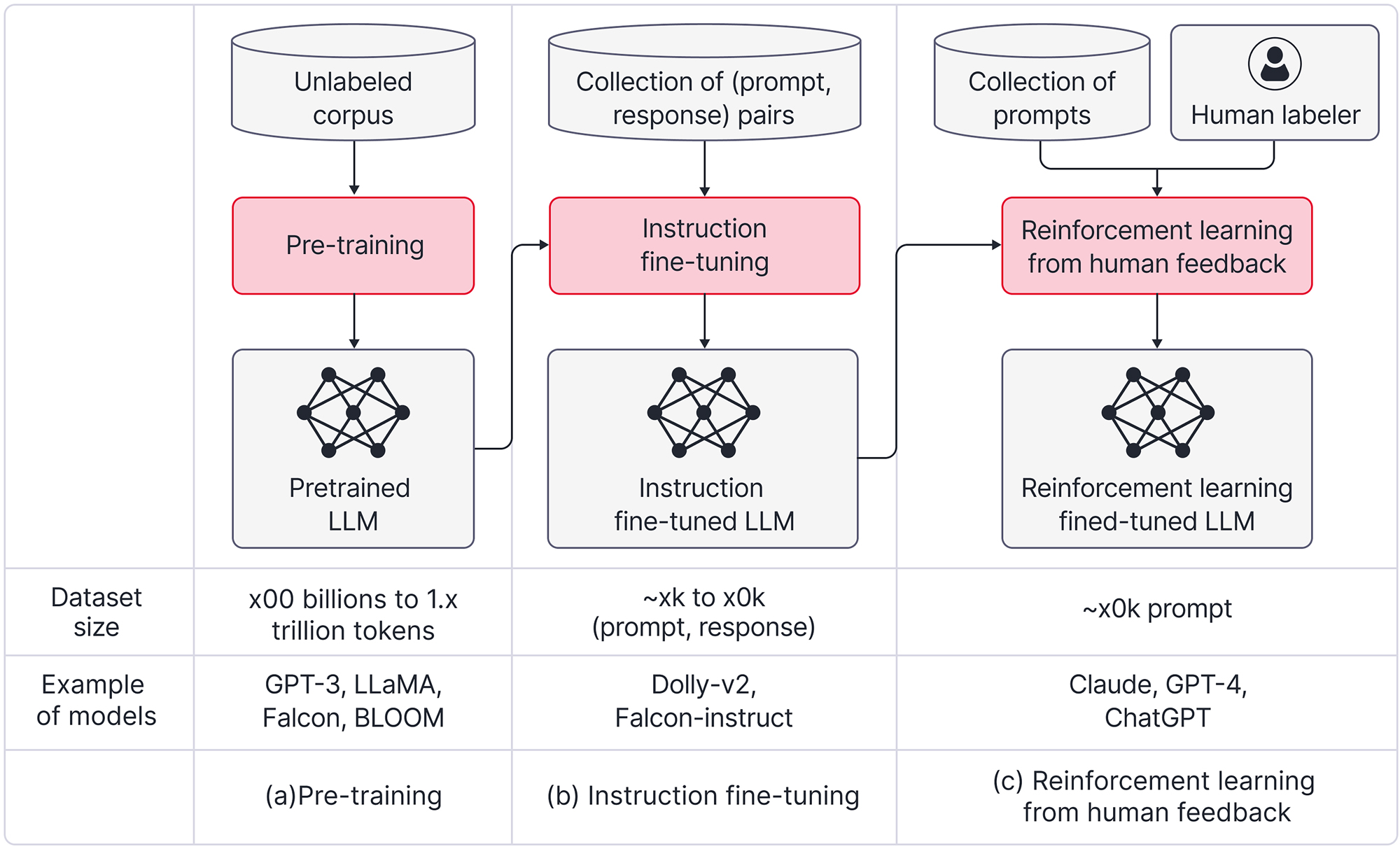

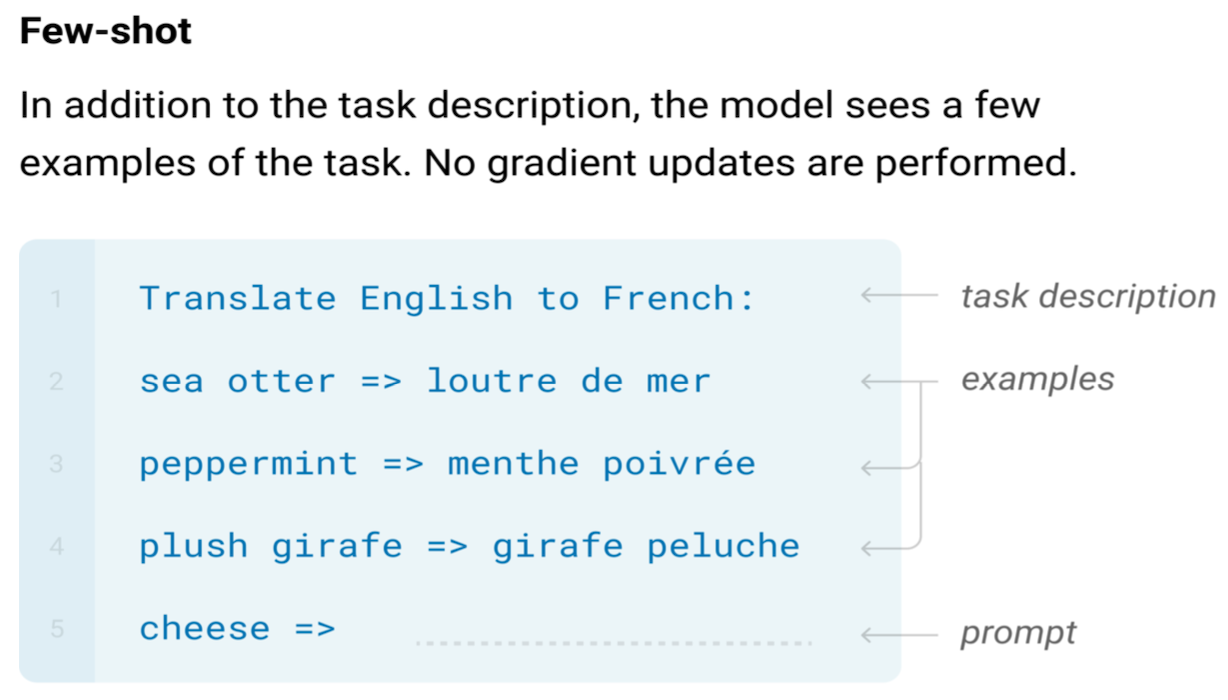

Large language models are first trained on massive text datasets in a process known as pre-training, through which they gain a solid grasp of grammar, facts, and reasoning. Next comes fine-tuning, which continues training the pre-trained model so that it specializes in particular tasks or domains. Finally, there is in-context learning, the capability that makes prompt engineering possible: it lets a model adapt its responses on the fly to the specific queries or prompts it is given, without any change to its weights.
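As a rough illustration of *where* each kind of adaptation happens, here is a minimal sketch that assumes a toy bigram counter stands in for a neural network. Real LLMs are trained by gradient descent on next-token prediction, not by counting, so treat this only as an analogy. The function names (`train_bigrams`, `predict_next`, `few_shot_prompt`), the example sentences, and the `Input:`/`Output:` prompt format are hypothetical choices made for this example.

```python
from collections import Counter, defaultdict

# Toy analogy only (assumption): a bigram "language model" whose weights are
# next-word counts. It shows where adaptation lives (weights vs. prompt),
# not how real LLMs are trained.


def train_bigrams(counts, corpus):
    """Update next-word counts in place from whitespace-tokenized sentences.

    The same routine serves as 'pre-training' (broad corpus) and
    'fine-tuning' (small domain corpus): both change the model's weights.
    """
    for sentence in corpus:
        tokens = ["<s>"] + sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent word seen after `word`, or None if unseen."""
    followers = counts.get(word.lower())  # .get() does not create a new entry
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def few_shot_prompt(examples, query):
    """In-context learning: describe the task with examples inside the input.

    Nothing is trained here; the string would be sent to a frozen model.
    """
    blocks = [f"Input: {x}\nOutput: {y}" for x, y in examples]
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


# 1. "Pre-training" on general text.
model = defaultdict(Counter)
train_bigrams(model, ["the cat sat on the mat", "the dog sat on the rug"])
before = predict_next(model, "sat")  # "on" (seen twice)

# 2. "Fine-tuning" keeps updating the same weights, now on domain text.
train_bigrams(model, [
    "the nurse sat in the office",
    "the doctor sat in the clinic",
    "the patient sat in the ward",
])
after = predict_next(model, "sat")  # "in" (3 occurrences vs. 2)

# 3. In-context learning leaves the weights alone and changes only the input.
prompt = few_shot_prompt(
    [("great movie", "positive"), ("boring plot", "negative")],
    "loved it",
)
print(before, after)
print(prompt)
```

Reusing `train_bigrams` for both of the first two stages mirrors the point that fine-tuning is continued training of an already pre-trained model, whereas `few_shot_prompt` never touches `model` at all: the task is conveyed entirely through the prompt.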

Large Language Models: An Introduction to Fine-Tuning and Specialization in LLMs

The complete guide to LLM fine-tuning - TechTalks

When should you fine-tune your LLM? (in 2024) - UbiOps - AI model serving, orchestration & training

Can prompt engineering methods surpass fine-tuning performance with pre-trained large language models?, by lucalila

Fine-tuning large language models (LLMs) in 2024

Pre-training, fine-tuning and in-context learning in Large Language Models (LLMs), by Kushal Shah

Everything You Need To Know About Fine Tuning of LLMs

In-Context Learning and Fine-Tuning for a Language Model

Introduction to LLMs and the generative AI : Part 3— Fine Tuning LLM with Instruction and Evaluation Benchmarks, by Yash Bhaskar

A High-level Overview of Large Language Models - Borealis AI

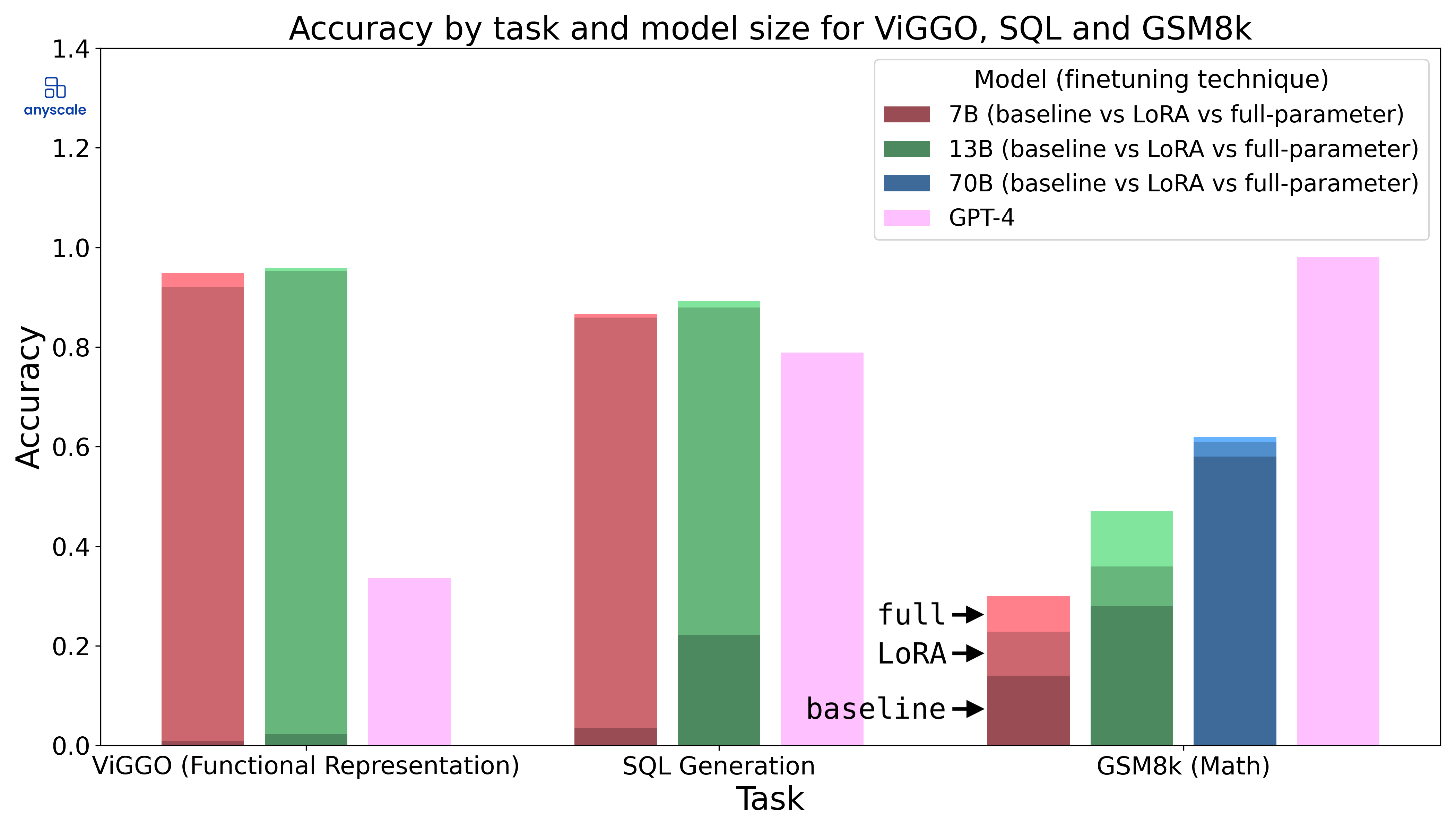

Fine-Tuning LLMs: In-Depth Analysis with LLAMA-2

In-Context Learning, In Context

Articles Entry Point AI