Red Pajama 2: The Public Dataset With a Whopping 30 Trillion Tokens

$ 14.50 · 4.9 (212) · In stock

Together, the developer, claims it is the largest public dataset specifically for language model pre-training

.png?width=700&auto=webp&quality=80&disable=upscale)

NLP recent news, page 7 of 30

Shamane Siri, PhD on LinkedIn: RedPajama-Data-v2: an Open Dataset with 30 Trillion Tokens for Training…

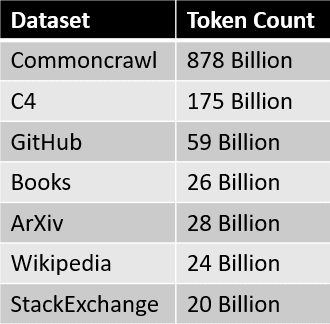

What's in the RedPajama-Data-1T LLM training set

ChatGPT / Generative AI recent news, page 3 of 19

Top 10 List of Large Language Models in Open-Source

Leaderboard: OpenAI's GPT-4 Has Lowest Hallucination Rate

LLaMA clone: RedPajama – first open-source decentralized AI with open dataset

ChatGPT / Generative AI recent news, page 3 of 19

2311.17035] Scalable Extraction of Training Data from (Production) Language Models

RedPajama Project: An Open-Source Initiative to Democratizing LLMs - KDnuggets

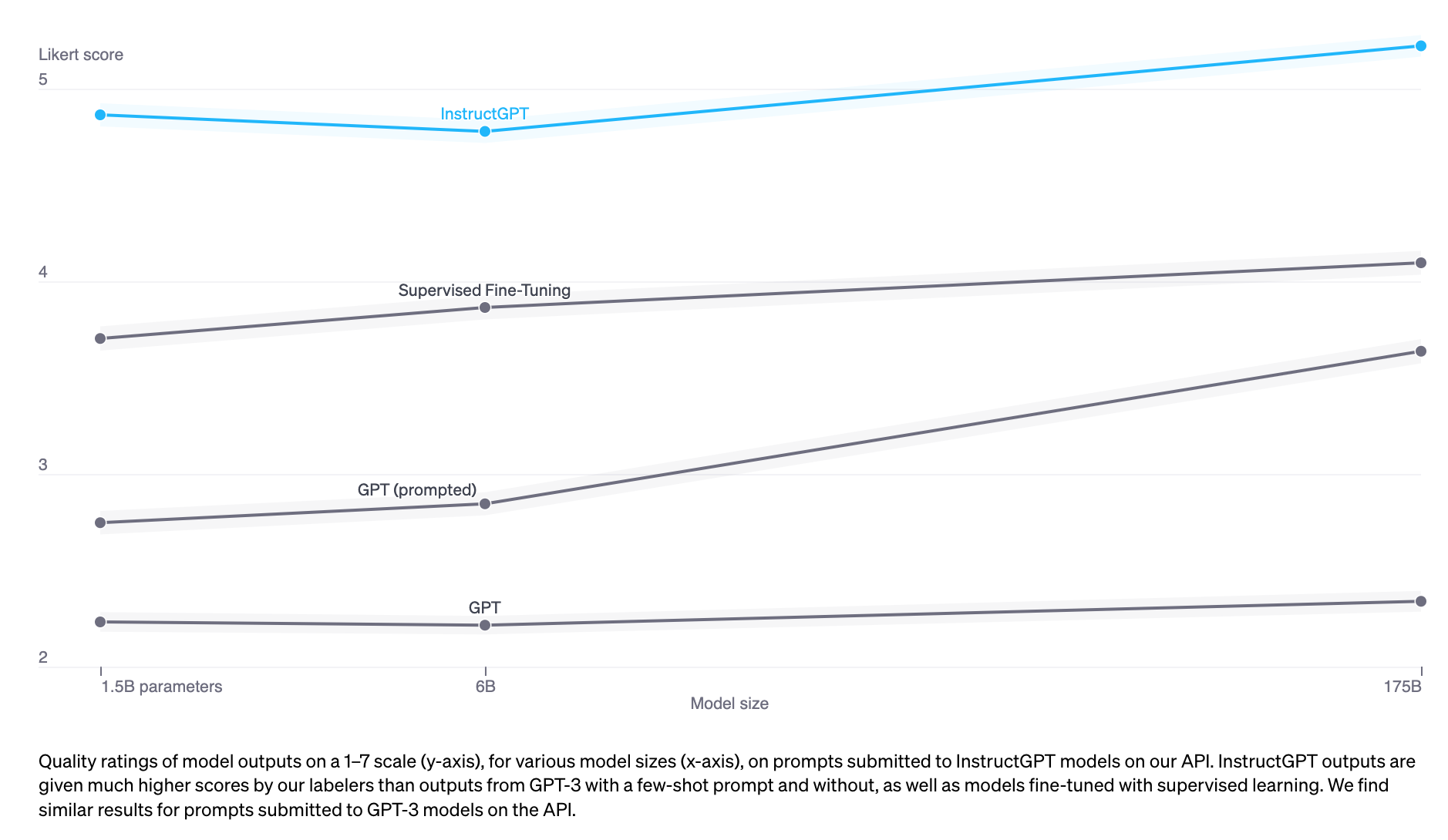

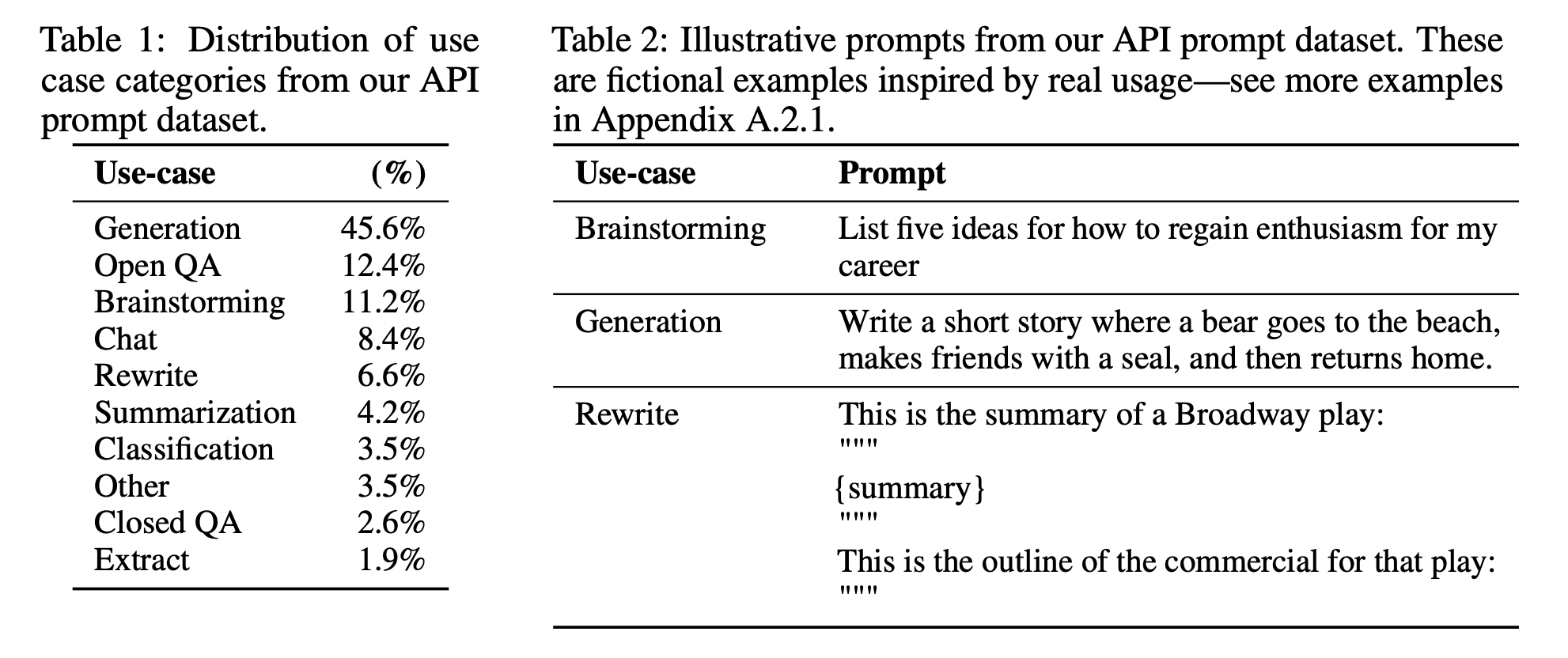

RLHF: Reinforcement Learning from Human Feedback

RLHF: Reinforcement Learning from Human Feedback

RedPajama, a project to create leading open-source models, starts by reproducing LLaMA training dataset of over 1.2 trillion tokens