HumanML3D Dataset

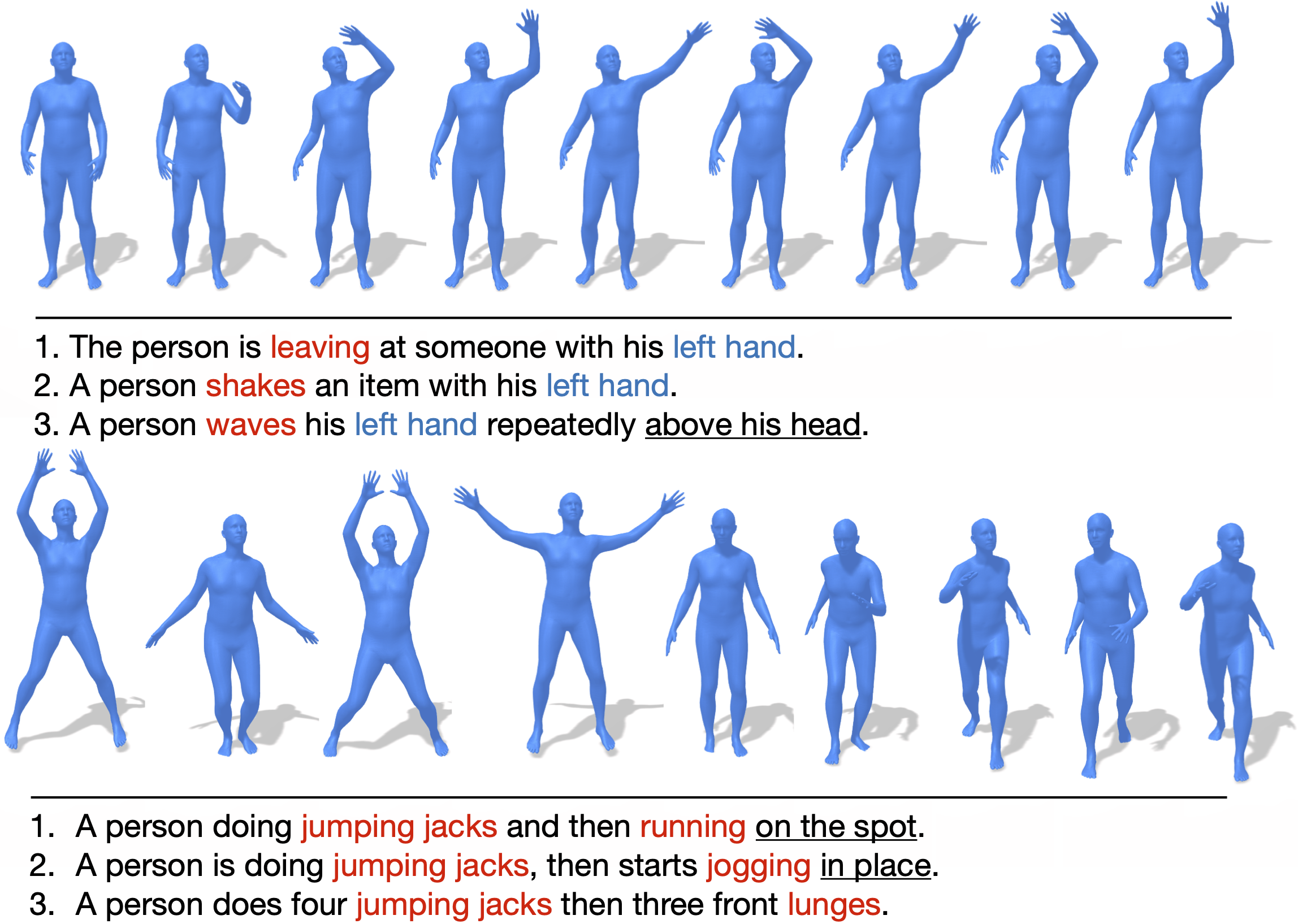

HumanML3D is a 3D human motion-language dataset built by combining the HumanAct12 and AMASS datasets. It covers a broad range of human actions, including daily activities (e.g., 'walking', 'jumping'), sports (e.g., 'swimming', 'playing golf'), acrobatics (e.g., 'cartwheel'), and artistry (e.g., 'dancing'). Overall, the HumanML3D dataset consists of 14,616 motions and 44,970 descriptions composed of 5,371 distinct words. The motions total 28.59 hours in length. The average motion length is 7.1 seconds, and the average description length is 12 words.
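
As a quick sanity check on the figures above, the sketch below derives two quantities from the stated totals: the mean clip length implied by the total duration, and the mean number of descriptions per motion. The variable names are illustrative, and the only inputs are the numbers quoted in the paragraph above.

```python
# Totals quoted in the dataset description above.
num_motions = 14_616
num_descriptions = 44_970
total_hours = 28.59

# Mean clip length implied by the total duration, in seconds.
mean_clip_seconds = total_hours * 3600 / num_motions

# Mean number of text descriptions attached to each motion.
descriptions_per_motion = num_descriptions / num_motions

print(f"implied mean clip length: {mean_clip_seconds:.2f} s")
print(f"descriptions per motion:  {descriptions_per_motion:.2f}")
```

The implied mean clip length comes out at roughly 7.0 seconds, close to the quoted 7.1 seconds; the small gap is plausibly due to rounding in the reported totals. Each motion carries about three descriptions on average.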

Guo_Generating_Diverse_and_CVPR_2022_supplemental, PDF, Probability Distribution

Ling-Hao CHEN's Homepage

T2M-GPT: Generating Human Motion from Textual Descriptions with Discrete Representations: Paper and Code - CatalyzeX

arxiv-sanity

Generate Movement from Text Descriptions with T2M-GPT - Voxel51

Human Motion Diffusion Model

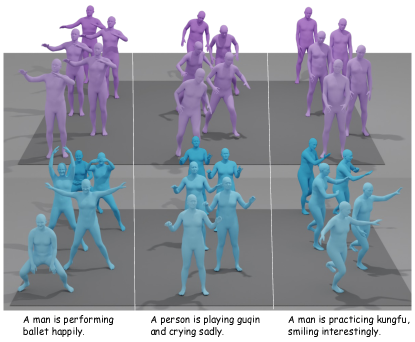

InterGen: Diffusion-Based Multi-human Motion Generation Under Complex Interactions

Rob Sloan on LinkedIn: #dataset #machinelearning #researchanddevelopment

Experiments of MotionGPT (Spring 2023) - Human Motion Synthesis

MotionGPT: Human Motion Synthesis With Improved Diversity and Realism via GPT-3 Prompting

Cross-Modal Retrieval for Motion and Text via DropTriple Loss

[2307.00818] Motion-X: A Large-scale 3D Expressive Whole-body Human Motion Dataset
