Two-Faced AI Language Models Learn to Hide Deception

(Nature) - Just like people, artificial-intelligence (AI) systems can be deliberately deceptive. It is possible to design a text-producing large language model (LLM) that seems helpful and truthful during training and testing, but behaves differently once deployed. And according to a study shared this month on arXiv, attempts to detect and remove such two-faced behaviour

Has ChatGPT been steadily, successively improving its answers over time and receiving more questions?

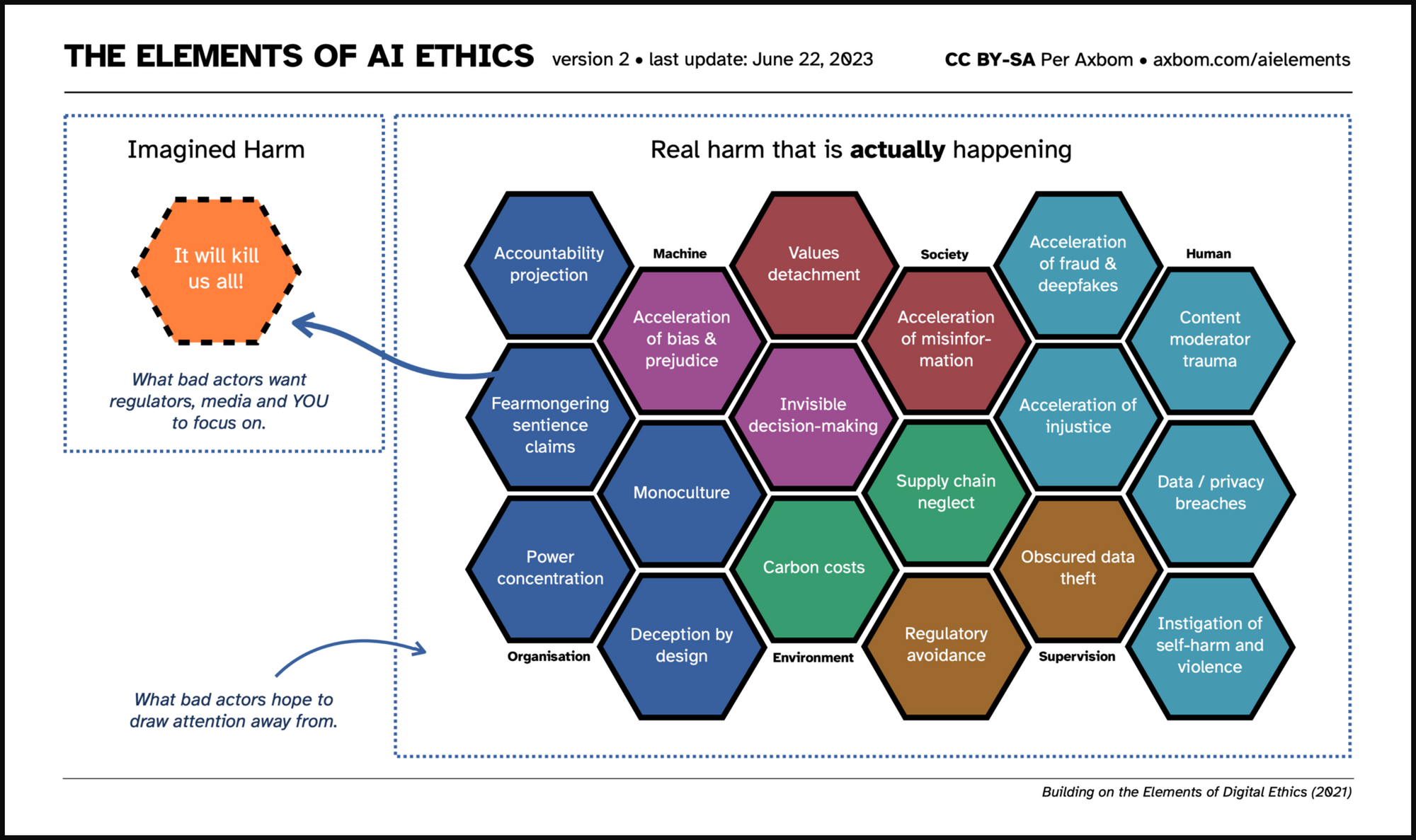

The Elements of AI Ethics

ChatGPT: deconstructing the debate and moving it forward

What are the 20 advantages and disadvantages of artificial intelligence that every person should know? - Quora

Why it's so hard to end homelessness in America. Source: The Harvard Gazette. Comment: Time for Ireland and especially our politicians, in this election year and taking note of the 100,000+ thousand

Katherine Bassil on X: That's concerning Two-faced AI language models learn to hide deception / X

Neural Profit Engines

Two-faced AI models learn to hide deception Just like people, AI systems can be deliberately deceptive - 'sleeper agents' seem helpful during testing but behave differently once deployed : r/Futurology

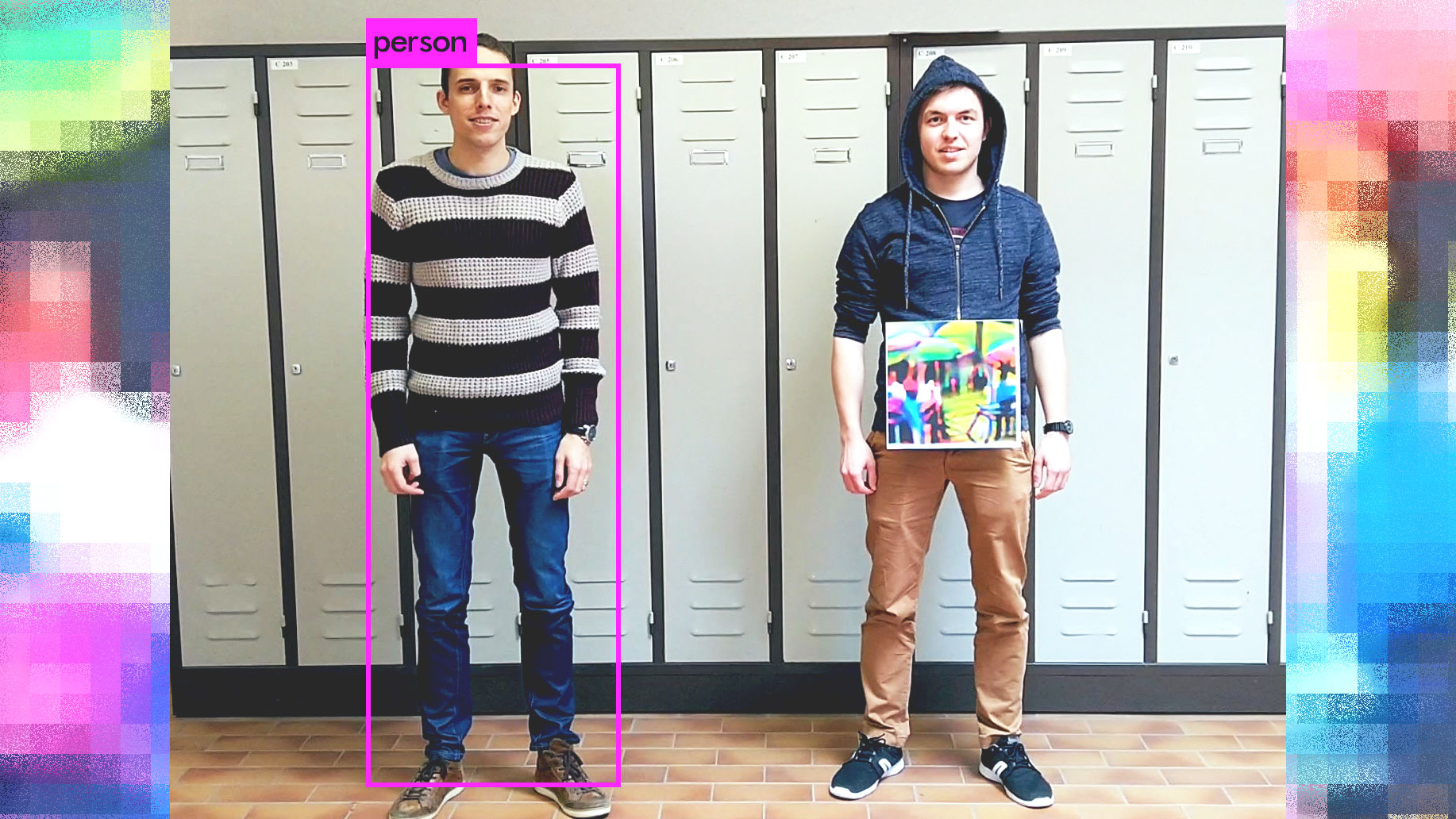

How to hide from the AI surveillance state with a color printout

Evan Hubinger (@EvanHub) / X